Submitted by lexa on

Lossy compression of raw data is currently the only option available in Sony cameras of the NEX, SLT, RX, ILCE, and ILCA series, and in the recent DSLR-A models. The first part of this article shows how to detect artifacts caused by this compression. The second part discusses the technical details of the compression scheme.

In the vast majority of cases, the compression artifacts are imperceptible unless a heavy-handed contrast boost is applied. There are, however, exceptions. With some unlucky stars in alignment, the artifacts can become plainly visible, even without much image processing. All it takes for the artifacts to threaten the quality of the final image is a combination of high local contrast and a flat, featureless background.

Let's have a look at the example provided by Matti Koski; it is the same example that Lloyd Chambers published in his blog. Update: Many thanks to Mr. Koski, who has now given us his permission to link to the original raw file _DSC0035-startrails.ARW

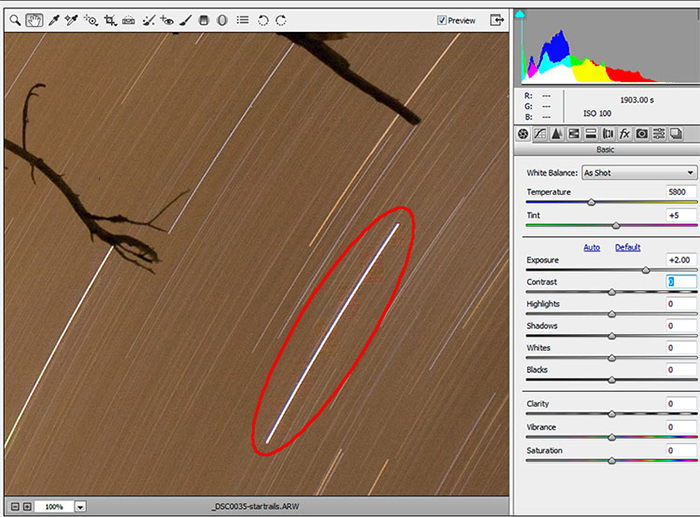

The artifacts in the form of a horizontal color fringe on both sides of one of the star trails are very visible even if all of the sliders are set to zero, except the exposure slider:

Figure 1. Star Trails. Shot opened in Adobe Camera Raw

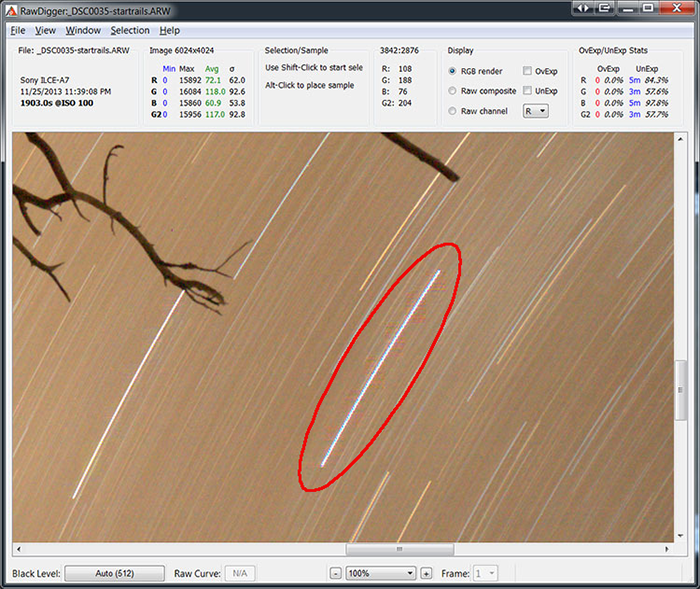

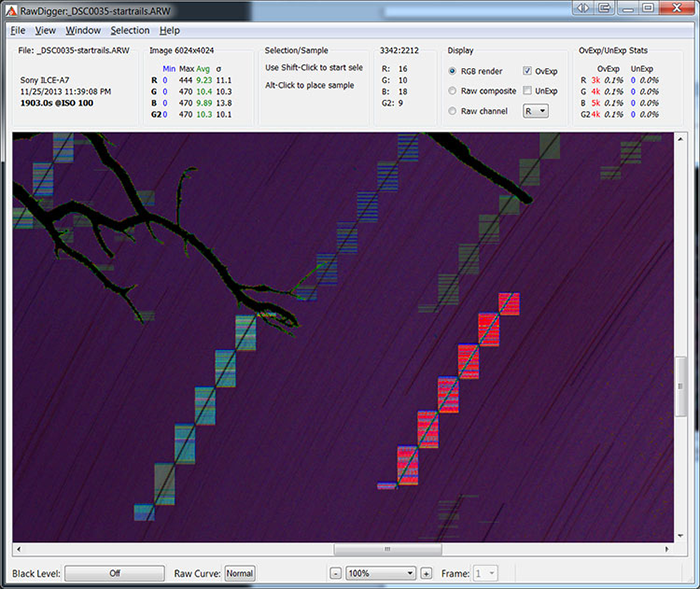

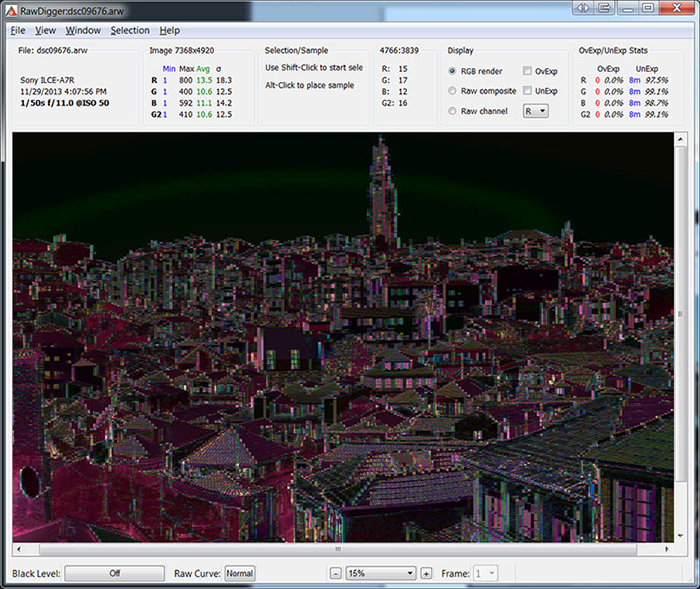

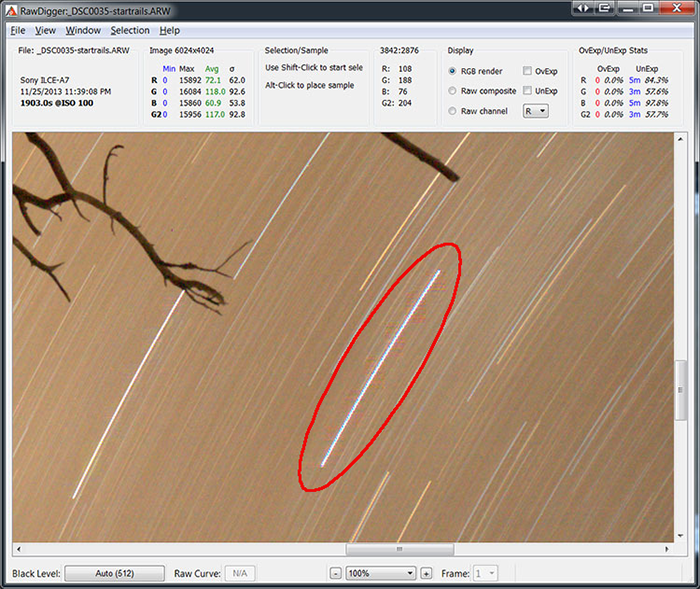

This problem is even more visible in RawDigger because, for display purposes, RawDigger employs a variant of Auto Levels in which the tones of the image are stretched to occupy the whole tonal range:

Figure 2

What we have here is a clear case of posterization, which is the lack of continuous gradation of tone, or, in other words, color quantization. It is caused by a sort of rounding error specific to the compression scheme Sony uses for its cRAW format. This error occurs when the step between values in the 16-byte block is too coarse (a detailed explanation of the mechanism is in the «Inside SONY cRAW format» part of this article, below). As a result of this rounding error, the artifacts are visible even without moving the contrast slider to the right.

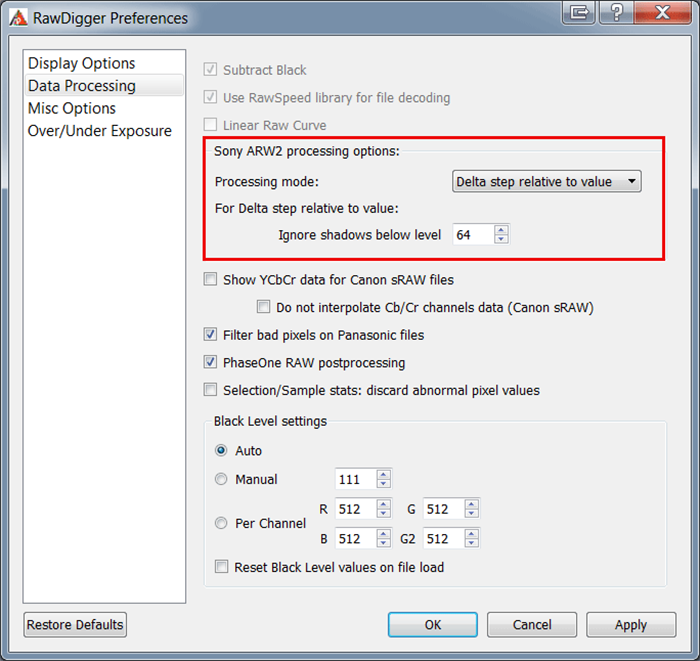

To detect the problematic areas in images captured in Sony cRAW format, RawDigger, starting with version 1.0.5, offers a special unpacking and display mode, which can be switched on with:

Preferences – Data Processing – Sony ARW2 processing mode – Processing mode: Delta step relative to value

Figure 3

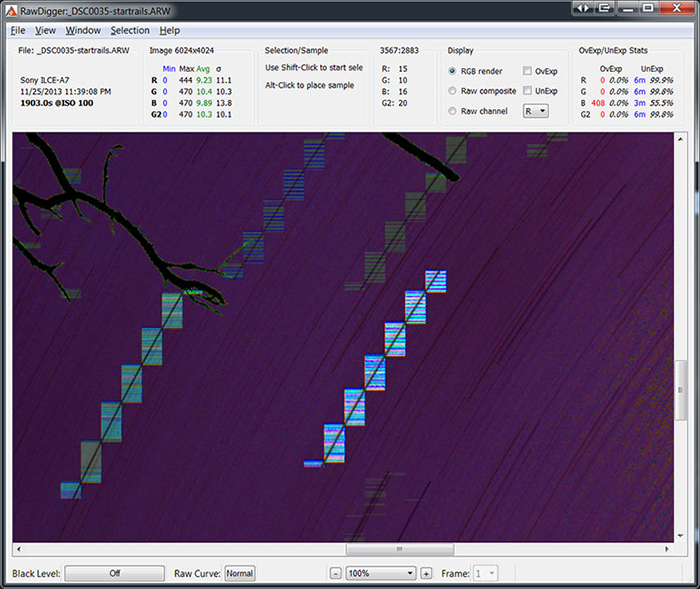

In this mode, instead of a regular procedure of data unpacking, we calculate the degree of posterization, that is, the ratio of the minimum variation of data (data step) in the 16-pixel block to the value of the pixel. This ratio is in per mil (1/1000, ‰). Consequently, the ratios of several tens per mil (that is several percent) may be visible if the background is a smooth gradient. Several hundreds per mil (that is several tens of percent) will certainly be visible if the background is smooth and featureless.
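As a rough illustration of the ratio described above, here is a minimal Python sketch that expresses a data step relative to a pixel value in per mil. The function name `posterization_permil` is chosen for this example only; it is not RawDigger's actual code.

```python
def posterization_permil(step: int, pixel_value: int) -> float:
    """Data step of a block relative to a pixel value, in per mil (1/1000)."""
    if pixel_value <= 0:
        raise ValueError("pixel value must be positive")
    return 1000.0 * step / pixel_value


# A step of 2 at level 64 is 2/64 of the value, that is 31.25 per mil (3.125%).
print(posterization_permil(2, 64))
```

Values of several tens per mil correspond to several percent, and several hundreds per mil to several tens of percent.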

Here is the same area of the star trails shot displayed in Delta step relative to value mode:

Figure 4

As you can see, the areas containing artifacts can be easily detected. These areas may exhibit posterization on the final image.

With a little effort we can make this mode even more usable.

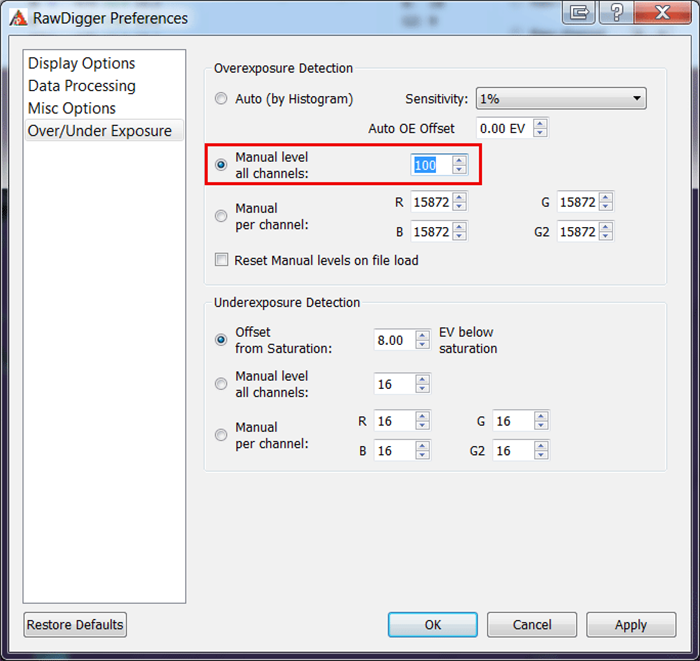

Suppose we want to select the areas where the degree of posterization is 10% (100‰). To do so, in RawDigger Preferences – Over/Under Exposure, we set Manual level all channels in the Overexposure Detection group to 100:

Figure 5

Switching on the indication of Overexposure (check mark in OvExp) we will see a red overlay over the areas where the degree of posterization is higher than 10%:

Figure 6

In this mode, the areas of potential posterization are displayed over the whole image. However, on the final image, posterization will be visible only over smooth and featureless backgrounds like sky, sometimes eye whites, and flat shadows. In all other cases, the only effect of this posterization is an error in local color reproduction. There is very little chance that this error will be discernible.

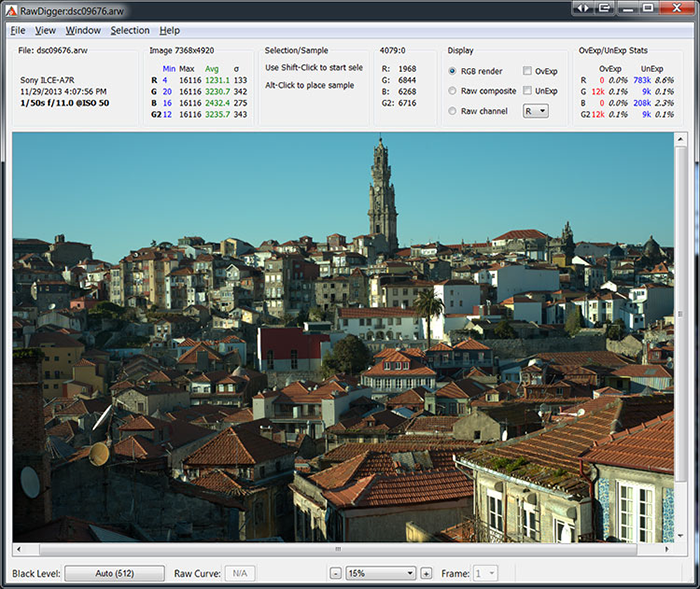

Let’s take a look at the following shot (from Quesabesde's Sony A7R analysis page):

Figure 7

All of the hard contrast edges exhibit some artifacts where one can suspect posterization:

Figure 8

However, on the final image the posterization is visible only on the edge of the tower where the background is a featureless blue sky.

We will use this shot to demonstrate an additional setting, which helps to exclude the lowest tone values (deep shadows) from the posterization map.

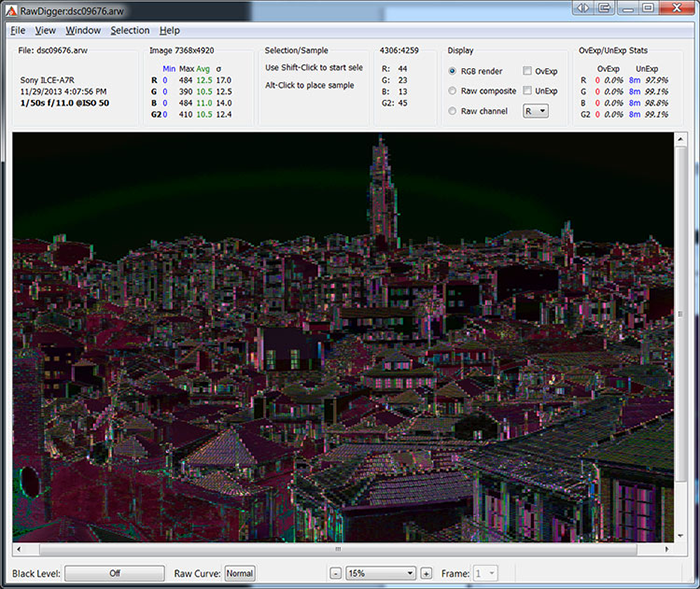

Deep shadows always contain hidden, but substantial posterization because only a few levels are present there. Even if the encoding is lossless, the minimal step in the shadows of a cRAW file is equal to 2. This means that if the level is 64, the degree of posterization is more than 30‰ (2/64 = 3.125%).

In order to keep the display of the image cleaner, you can use the Ignore shadows below level NN setting. This setting is active only together with Sony ARW2 Processing – Delta Step relative to value.
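The combined effect of a threshold and a shadow floor can be sketched in a few lines of Python. This is only an illustration of the idea: the function names, the `ignore_below` parameter and the sample values are assumptions made for this example and do not reflect RawDigger's internal implementation.

```python
def posterization_map(values, step, ignore_below=0):
    """Per-pixel step/value ratio in per mil; pixels under the shadow floor map to 0."""
    ratios = []
    for value in values:
        if value <= 0 or value < ignore_below:
            ratios.append(0.0)          # shadows excluded from the map
        else:
            ratios.append(1000.0 * step / value)
    return ratios


def flag(ratios, threshold_permil=100.0):
    """Mark pixels whose ratio exceeds the threshold (100 per mil = 10%)."""
    return [ratio > threshold_permil for ratio in ratios]


pixels = [16, 64, 300, 1200]            # hypothetical linear values, step of 2
print(flag(posterization_map(pixels, step=2)))
print(flag(posterization_map(pixels, step=2, ignore_below=128)))
```

With the floor at zero the deepest shadow pixel is flagged even though the step is the minimal one; raising the floor removes it from the map.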

If this setting is turned off (that is, the value in the field is set to zero), the scene will show additional posterization in the shadows on the foreground:

Figure 9

Inside SONY cRAW format

Sony cRAW files are packed and unpacked as follows:

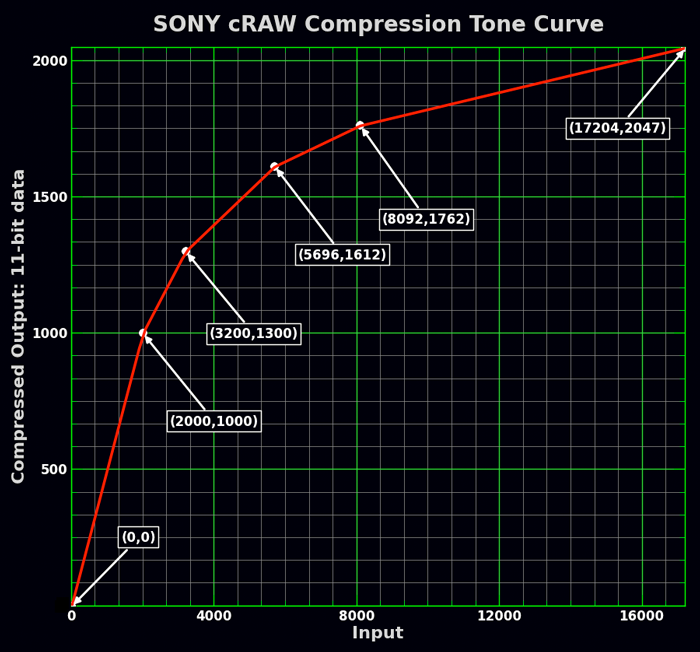

- Data coming from the sensor is biased by the black level (for example, for Sony A7r camera black level is 512 and this value is added to all the data) and is compressed through the tone curve equivalent to the one below; the compressed result contains 11-bit values.

Figure 10

- Each data row (a row has the height of 1 pixel) is split into 32-pixel chunks, each chunk containing interleaved pixels from 2 color channels. For odd rows a chunk contains 16 R-pixels and 16 G-pixels (RGRGRG…), for even rows it contains 16 G-pixels and 16 B-pixels (GBGBGB…)

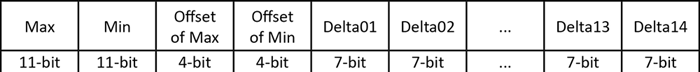

- The structure of each 16-pixel block is:

Figure 11

Here, Max is the maximum pixel value in the block, Min is the minimum value in the block, Offsets are the positions of pixels with Max and Min values relative to the block start

Deltas are calculated as:

$$\text{DataSpan} = \text{Max} - \text{Min}$$

$$\text{Step} = 2^{\lceil \log_2(\text{DataSpan}/128) \rceil}$$

$$\text{Delta} = (\text{PixelValue} - \text{Min}) / \text{Step}$$ (see more on this below)

The length of the block is 2*11+2*4+14*7=128 bits, or 16 bytes. Thus the raw data compressed per cRAW scheme effectively uses 1 byte per pixel; but the total ARW2 file size is slightly larger as it contains not only cRAW data, but also metadata and JPEG preview.

The larger the data span in a 16-pixel block, the larger the step by which each delta value is multiplied to restore the pixel value, and consequently the coarser the restored data. For a data span of less than or equal to 128, the step is 1, and no rounding error is introduced while reversing the delta encoding. For a larger span, the scheme does introduce a rounding error, which may lead to posterization. In other words, if a chunk of 32 pixels spans an area that contains a large variation in brightness, the data in the block is not exact, but only an approximation.

| Data span less than | 128 | 256 | 512 | 1024 | 2048 |
|---|---|---|---|---|---|
| Step | 1 | 2 | 4 | 8 | 16 |
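The step selection and the resulting rounding error can be tried out with a small Python sketch. It follows the formulas given above, with two assumptions that are ours: the delta is obtained by truncating division, and it is clamped to the 7-bit maximum of 127. The exact rounding used by the camera is not established here, so treat this as an illustration and not as a bit-exact model of the format.

```python
BLOCK_BITS = 2 * 11 + 2 * 4 + 14 * 7   # two base values, two offsets, 14 deltas = 128


def step_for_span(span: int) -> int:
    """Smallest power of two with 128 * step >= span (the ceiling form of the formula)."""
    step = 1
    while 128 * step < span:
        step *= 2
    return step


def roundtrip(block):
    """Delta-code a block of compressed values and restore it: returns (step, restored)."""
    lo, hi = min(block), max(block)
    i_min, i_max = block.index(lo), block.index(hi)
    step = step_for_span(hi - lo)
    restored = []
    for i, value in enumerate(block):
        if i in (i_min, i_max):
            restored.append(value)                   # base pixels keep full 11-bit precision
        else:
            delta = min((value - lo) // step, 127)   # assumed: truncation and 7-bit clamp
            restored.append(lo + delta * step)
    return step, restored


# Hypothetical compressed values: flat sky near 300 plus two bright trail pixels.
block = [296, 301, 307, 310, 299, 305, 1474, 1400,
         312, 303, 298, 309, 300, 306, 302, 304]
step, restored = roundtrip(block)
print(step)       # 16
print(restored)   # sky pixels collapse onto multiples of 16 above the minimum
```

Without the two bright pixels the span of the same block would be 16 and every value would be restored exactly.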

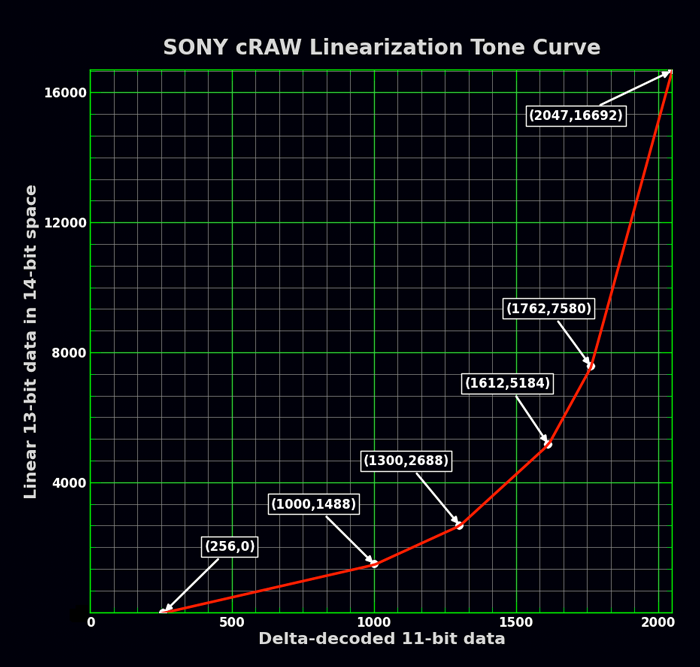

- During the raw conversion, first, the delta encoding is reversed, next, the linearization tone curve (contained in the metadata of Sony ARW2 files) is applied to decompress the data, and finally the black level (also taken from metadata) is subtracted from the result.
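The three unpacking steps can be sketched in Python as follows. The real linearization curve is read from the file's metadata; the breakpoints used here are assumed values, picked only because a piecewise-linear curve whose slope doubles at each of them reproduces the figures quoted later in this article (296 ↔ 80 and 1474 ↔ 4080 with a black level of 512). The function names and the indexing of the curve at twice the 11-bit code are likewise assumptions of this sketch.

```python
# Assumed breakpoints on a 4096-entry curve; real files carry the curve in metadata.
BREAKPOINTS = (2000, 2600, 3225, 3525)
BLACK_LEVEL = 512   # example value given above for the Sony A7R


def build_curve(breakpoints=BREAKPOINTS, size=4096):
    """Piecewise-linear curve: slope 1, 2, 4, 8, 16 on successive segments."""
    curve = [0] * size
    edges = (0, *breakpoints, size - 1)
    for segment in range(5):
        for j in range(edges[segment] + 1, edges[segment + 1] + 1):
            curve[j] = curve[j - 1] + (1 << segment)
    return curve


def decode_pixel(block_min, delta, step, curve, black=BLACK_LEVEL):
    compressed = min(block_min + delta * step, 2047)   # 1. reverse the delta encoding
    linear = curve[compressed << 1]                     # 2. apply the linearization curve
    return linear - black                               # 3. subtract the black level


curve = build_curve()
print(decode_pixel(296, 0, 16, curve))   # 80
print(decode_pixel(296, 1, 16, curve))   # 112: one delta step of 16 is 32 linear units here
```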

After black level subtraction Sony cRAW linearization tone curve is effectively this:

Figure 12. Sony cRAW tone curve with black level being subtracted

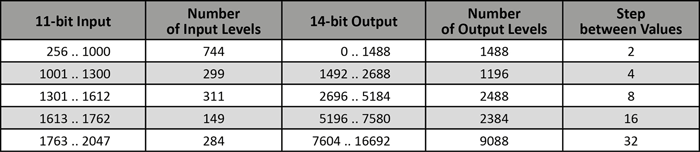

Or, in a form of a table:

Figure 13

Consider the star trails shot once again:

Figure 14

Inspecting the green channel, we can see that the RAW values in the proximity of the track (black level is subtracted) are:

- The star trail proper has pixel values that reach as high as 4080

- The sky around the trail is a smooth featureless background, and the pixel values close to the trail range from 80 to 200.

Applying compression tone curve to this data:

- The maximum value is 1474 (compression applied to 4080)

- The minimum value is 296 (compression applied to 80)

Data span = (max – min) = (1474 – 296) = 1178.

In order to code values up to 1178 in 7 bits we need to set the step to 16: $2^{\lceil \log_2(1178/128) \rceil} = 16$.

In other words, for a data span of 1178 the nearest larger number that is an exact power of 2 is $2048 = 2^{11}$. Dividing 2048 by 128, we have the step of 16.

The delta values during the decompression are multiplied by this step and further decompressed through the curve, which you can dump using RawDigger. In our example, after decompression through the tone curve the step of 16 becomes 32, that is ≈14-30% of the values characteristic of the background. Naturally, with such a coarse step, posterization becomes plainly visible.

Other Modes under ARW2 Data Processing

Since we have already discussed cRAW/ARW2 format, it is easier to understand the other options under Sony ARW2 Data processing:

- Standard processing – regular unpacking of RAW data

- Only base pixels – only the pixels for which precise 11-bit values are coded (min and max in the block) are unpacked, while all other pixels (Delta pixels) are set to zero.

- Only delta pixels – Delta pixels are unpacked and their values are restored by adding the minimum value; the base pixels are then set to zero.

- Delta pixels relative to zero – same as the previous setting, but minimum values are not added to Delta pixels. This mode, like the one below, is also useful for determining the areas where posterization may occur. However, we have since added a more telltale mode, which is the one below.

- Delta step relative to value – this mode was used throughout the whole article.

This article in PDF format: RawDigger_Detecting_Posterization_in_Sony_Camera.pdf


75 Comments

mr Kasson ( of blog.kasson

Submitted by n/a (not verified) on

Mr. Kasson (of blog.kasson.com) noted that in both continuous mode and exposure bracketing mode the Sony A7/A7R use fewer bits (that is, before the curve/compression is applied to the signal/data) than in single-shot mode... true?

The data format is the same

Submitted by lexa on

The data format is the same for all modes (11 bit 'base' values, 7-bit 'deltas', 11 to 14 bit tone curve).

So a lower 'bit count' should show up as gaps in the linear RAW histogram. I'll try to test it tomorrow.

well, Kasson does say that

Submitted by n/a (not verified) on

well, Kasson does say that single shot is different from rapid shots modes... case in point the sensor in E-M1/GH4 - in silent electronic shutter mode (implemented only in Panasonic cameras) it is 10bit output... normal shutter 12bit... from http://www.semicon.panasonic.co.jp/ds8/c3/IS00006AE.pdf

I did not mean the data

Submitted by n/a (not verified) on

I did not mean the data format itself of course... I mean content which is compressed/encoded.

Technically, the curve is

Submitted by Iliah Borg on

Technically, the curve is implemented in analog domain, that's why "bits" are not the exact term to be used; and that's why we used the word "equivalent" for the curve on fig.10. In some Sony cameras modes other than the single shot indeed result in what effectively is 1 bit less, with larger step along the same curve.

--

Best regards,

Iliah Borg

Thank you Iliah. Kindest

Submitted by Raul (not verified) on

Thank you Iliah. Kindest regards.

Dear Raul,

Submitted by Iliah Borg on

Dear Raul,

Our pleasure ;)

RawDigger is Alex and I, and this article is no exception ;)

--

Best regards,

Iliah Borg

> Technically, the curve is

Submitted by n/a (not verified) on

> Technically, the curve is implemented in analog domain

you stated yourself that there is no direct test for that - only a logical assumption...

No conclusive test with a

Submitted by Iliah Borg on

No conclusive test with a camera as an object that I can come up with, for sure. But there are other ways of getting necessary information.

--

Best regards,

Iliah Borg

Histogram

Submitted by Matti Remonen on

Why are there no visible gaps shown on the (RawDigger) histogram even though there should be because of the linearization?

For Sony A7: switch histogram

Submitted by lexa on

For Sony A7: switch the histogram to linear mode, set the bin size to 1 (you'll need to select some narrow part of the whole range) and you'll see the gaps very clearly.

Ok, thanks. I'll check this.

Submitted by Matti Remonen on

Ok, thanks. I'll check this. I tried with linear mode, but probably left the bin size too large.

And this should work with all compressed Sony RAW's (I own NEX6 & 5N)?

The tone curve (decompression

Submitted by lexa on

The tone curve (decompression curve) is the same for all Sony cameras that use the cRAW format (all NEX, all SLT, all RX*, A7/A7R, and the latest DSLR-Axxx).

A7(R), SLT-A99 and RX1(R) cameras are really 13-bit in the shadow part (below level 1488 after decompression and bias subtraction): only even bins are non-zero, while odd bins are zero (to see this clearly you need to examine a small selection; on the entire image the picture is not so clear because of possible post-processing, such as vignetting compensation).

Other cRAW cameras (NEX, RX100, remaining SLT models) are 12-bit in shadows: only one bin of 4 is non-zero.

Here is the post in my blog (in Russian) with a bit-count table for all Sony models: http://blog.lexa.ru/2014/01/25/o_bitnosti_u_kamer_sony.html

Also, please note that noise reduction is performed after the ADC, so histogram patterns are not so clear if noise reduction is turned on.

And actually this was

Submitted by Matti Remonen on

And actually this was explained in the Histograms part 3-blog. Should've tried the RTFM-method before asking... :)

DNG file sizes

Submitted by M (not verified) on

Is this the reason that DNG files converted from my A7 ARW files turn out bigger (going from lossy to lossless compression)?

ARW is ~25 MB, DNG is ~30 MB. I embed a medium sized JPEG preview.

Yes. Sony ARW2.3 data is

Submitted by lexa on

Yes. Sony ARW2.3 data is exactly 1 byte per pixel (plus EXIF data and JPEG preview).

DNG files are 14-bit compressed. The data size depends on the compression ratio, and so on image characteristics (noise level, signal level and so on).

Hmm, thanks for your reply. I

Submitted by M (not verified) on

Hmm, thanks for your reply. I just got a slightly different result converting 1262 ARW files from the A7.

ARW: 31.51 GB

DNG: 33.31 GB

The reason seems to be that some DNG files go down as low as 14 MB. Hurts me to see that Sony is actually wasting 10 MB per file by using LOSSY compression on these files.

Could we hope that there will be a firmware upgrade that gives us a lossless option? I guess not...

Sony 'compression' is very

Submitted by lexa on

Sony 'compression' is very optimized to high speed read/write, while DNG's Lossless JPEG is not.

I found another interesting

Submitted by M (not verified) on

I found another interesting note about ARW files converted to DNG here:

http://www.fredmiranda.com/forum/topic/1255693/0#11970379

The poster says that files with embedded barrel distortion correction are linearized when converted to DNG. Could this really be true?

You can easily try yourself:

Submitted by lexa on

You can easily try it yourself: convert a RAW shot with visible distortion to DNG and compare the files in RawDigger. To view the overall look of the files you may use an unactivated/expired trial version.

this was a situation once

Submitted by n/a (not verified) on

this was a situation once when Panasonic introduced software optics correction in their raw files and Adobe (DNG standard) did not have any support yet... so for a while (quite long) Adobe DNG converter did convert .RW2 to linear .DNG, but then a new DNG spec was issued and Adobe DNG converter was updated... so it might be that he is converting with the settings that make the DNG compatible with the old DNG standard version? just a guess...

A year later but just to

Submitted by n/a (not verified) on

A year later but just to confirm for reference: Adobe's DNG converter linearises ARW-files only if the targeted compatibility is set to DNG 1.2. If set to 1.3 or 1.4 (default), it adds the embedded lens correction as 'WarpRectilinear' Opcode in the DNG-file and leaves the image data as CFA-data with lossless Huffman JPEG compression. (tested with A7)

The most important piece of information is missing

Submitted by Ilya Zakharevich (not verified) on

Alex, thanks for a very enlightening discussion! One remark:

Given a quantization method, to analyze the situation with posterization, one must know one number, and one number only: the ratio of the quantization step S to the noise σ which dithers the pre-quantization signal. With star trails, your discussion boils down to the quantization step being 32 (in 14-bit linear units); however, you absolutely ignore the question of the noise.

Fortunately, with the image you discuss, it looks easy to pick up a rectangle which almost touches the “bad” rectangles, and is “visually homogeneous”; one about 10×10 looks like not being a problem. Could you report the average and the noise of 4 channels in such a rectangle (in 14-bit linear units)? (Comparing two such rectangles may give a hint of whether this method of measuring noise is ”obviously faulty”.)

[In your Figure 4, for the trail slightly right of the center: I think that the “corner” of pinky area left of the trail between two large “bad” rectangles is a suitable candidate for such a measurement.]

The sigma value is about 12

Submitted by lexa on

The sigma value is about 12 in Green channel, so step/sigma is about 3.

Step/sigma=3 means there should be no posterization

Submitted by Ilya Zakharevich (not verified) on

Maximal possible posterization with S/σ=3 is (IIRC) about 0.7% of the quantization step (assuming normal distribution of the noise). So there may be absolutely no posterization visible (even with dumb dequantizers).

However, “noise modulation” — meaning that post-dequantization noise depends on where exactly the average signal was sitting between quantization levels — may be discernible (again, assuming dumb dequantizer). Moreover, the region where S/σ is between 3 and 4 is “the critical region” — the relevant numbers change very quickly there; so I must rerun my simulations with exact number 32/12! BTW, what are average values in these channels (and noise in R,G2,B)?

Thanks, Ilya

I do not understand.What is

Submitted by lexa on

I do not understand.

What is 'Maximal possible posterization', how do you calculate it?

What is posterization?

Submitted by Ilya Zakharevich (not verified) on

I checked the WikiPedia article, and it contains complete BS. No wonder people do not know what posterization is! (I think I saw nice explanation on a page of some Berkeley guy; will try to find it).

Anyway: assume that quantization step is 1; consider the case of signal X modulated by random (input) noise; this enters a dumb quantizer, which rounds it to the nearest integer. You get an integer-valued random variable ξ. Essentially, the output of quantizer is s(X) ≔ E(ξ), the average value of the output; this output is modulated by a certain noise, denote its magnitude by Σ(X). One can also average Σ(X) over possible values of X (for example, [0,1]); denote the averaged noise by Σ₀.

• The posterization is s(X)−X.

• The noise modulation is Δ(X) ≔ Σ(X)−Σ₀ (relative to Σ₀).

Assuming the input noise is normally distributed with variance σ, the functions E, Σ and Σ₀ acquire an extra argument σ. In such a case, calculating functions s and Σ is just a simple summation involving erf(). (The reason for the name “noise modulation”: consider a slow gradient as an input; then noise will “wave” between stronger and weaker as the gradient progresses between exactly quantizable values.)

In general, s(X) is below X for X∈[0,½], and above X for X∈[½,1]. If posterization is large (meaning σ<<1), then s(X) is very close to round-to-closest-integer function; about σ=1/5, s(X) starts to look more like X, and near σ=⅓, it is practically indistinguishable from X. ASCII art for s(X,σ=¼):

[ASCII plot: s(X, σ=¼) for X from 0 to 1, vertical axis from 0 to 1]

Even if the pass-through function s(X) is quite close to X, the differences in ds/dX at different values of X may still lead to certain defects of the image. (The difference of local contrast in almost-flat-color areas at 2 brightness levels — exactly quantizable ones, and exactly-in-between.)

BTW: I do not know any experiments about what the eye will be able to see first: variation in ds/dX, or the absolute shifts s(X)−X.
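The pass-through function s(X) described above can be evaluated numerically with a short Python sketch. It assumes a unit quantization step, a quantizer that rounds to the nearest integer, and Gaussian input noise, and it treats σ as the standard deviation of that noise; the function names are made up for this illustration.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, via erf()."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def quantizer_mean(x: float, sigma: float) -> float:
    """s(X): expected output of round(x + noise) for Gaussian noise of width sigma."""
    reach = int(math.ceil(abs(x) + 10.0 * sigma)) + 1   # levels beyond this are negligible
    total = 0.0
    for n in range(-reach, reach + 1):
        # Probability that x + noise falls into the bin that rounds to n.
        p = normal_cdf((n + 0.5 - x) / sigma) - normal_cdf((n - 0.5 - x) / sigma)
        total += n * p
    return total


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    s = quantizer_mean(x, 0.25)
    print(f"X={x:.2f}  s(X)={s:.4f}  s(X)-X={s - x:+.4f}")
```

The printed difference s(X)−X is the posterization in the sense defined above: negative below X=½, positive above it, and zero at 0, ½ and 1.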

BTW, since I’m already long: I think that it is nice to mark the different strategies of dequantizers like this:

• Dumb: would just return the integer Y fed to it;

• Dithering: returns the integer Y modulated by random noise (chosen according to predicted value of σ);

• Adaptive: would inspect the neighbor pixels and calculate δ=Average(Neighbors)−Y, and would:

(1) add a tiny bias depending on δ;

(2) add (tiny!) random noise depending on δ.

Dithering dequantizers provide only minuscule advantage over dumb ones:

• First of all, one needs a pretty strong noise to mask the noise modulation (Σ(½) is always ≥½);

• Second, the processing pipeline usually includes some kind of denoising step; so if the target of output dithering is hiding the difference s(X)−X, this hiding will disappear later in the pipeline.

The idea of adaptive dequantizers is based on the fact that Average(Neighbors) is also posterized, but hopefully, by a smaller amount than Y itself. Then δ is strongly correlated with X−Y, and adding an appropriate (tiny!) bias and noise would cancel posterization and noise modulation. (Unfortunately, for really small values of σ, the posterization of Y and of Average(Neighbors) will be practically the same: s()=round-to-integer(). For example: for closest neighbors, this strategy is not viable for about σ≤1/7.)

Why name ”adaptive”? Obviously, in the Average(Neighbors) taken above one should omit the neighbors with value outside [Y−1,Y+1]. So the algorithm “adapts” itself to almost-flat-color regions, and switches itself off on regions with large variation of the signal.

Simulation

Submitted by Ilya Zakharevich (not verified) on

[Actually, since quantization is applied to δ of the signal, the average value is irrelevant! So one should be able to proceed to analysis with the only info being S=32 and σ=12.] Below, I assume normal distribution of noise; as far as I understand physics of transistors, the read noise must have a lot of contribution from outliers; however, with σ=12, the read noise should be completely overwhelmed by the shot noise, which is almost normal.

Assuming that about 6 out of 16 data points in a block are too bright (contribution of the star trail), the minimum is taken over about 10 of non-trail points. Min(10 independent normal random variables) has 25%, 50%, and 75% percentiles at -1.9σ, -1.5σ, -1.14σ. Therefore, in a block, the “average sky” is about 18±5 units above the minimum.
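The percentiles of the minimum quoted above can be checked with a few lines of Python. The sketch assumes 10 independent standard normal variables, as in the comment; the helper name `min_quantile` is hypothetical.

```python
from statistics import NormalDist


def min_quantile(q: float, n: int = 10) -> float:
    """q-quantile of the minimum of n independent standard normal variables."""
    # P(min <= x) = 1 - (1 - Phi(x))**n, hence Phi(x) = 1 - (1 - q)**(1/n).
    return NormalDist().inv_cdf(1.0 - (1.0 - q) ** (1.0 / n))


for q in (0.25, 0.50, 0.75):
    print(f"{q:.0%} percentile of the minimum: {min_quantile(q):+.2f} sigma")
```

This gives roughly −1.9σ, −1.5σ and −1.13σ; with σ=12 the median corresponds to the "about 18 units above the minimum" used in the estimate.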

Assume that during quantization, switches between levels happen at half-integer multiples of the quantization step + ε; so 0…16 goes to 0, 18…48 to 32, 50…80 to 64, etc (odd values are irrelevant with A7). Then the average value of 17 is “the most shaky one”; it would have the maximal post-dequantization noise with σ'=16. (Most of the time, it would quantize to 0 and 32; quantization to 64 would require boost by noise by 50-17=33=2.75σ ⇒ probability at one data point=0.3%; so on 97% of blocks, only 0 and 32 will be seen.)

IIRC, humans can detect noise modulation of about 40%. Here we have noise modulation of 16 vs 12, which is 33%. So, noise modulation (even with dumb dequantizers) has quite a low chance to be visible.

With noise at 12/32 of the quantization step, maximal posterization is ±1.2% of the quantization step (happens at “level between steps” being 75% and 25% correspondingly). Maximal “relative posterization” is 12%. (All this assumes dumb dequantizer, which just emits multiples of 32 without any extra dithering.)

[Explanation: the input signal with the average of 24 (which is 75% of 32) will have average value of 24+32*0.012≈24.4 after dequantization. Writing this as s(24)=24.4, the relative posterization describes ds/dx, which is 0.88 at the x=“switch level”=17.]

———————————————————————————————————

Summary: theoretically, for the image in question, the Sony quantization scheme is sound; it should not cause any visible artifacts. The visible artifacts are either bugs in dequantizers (this is what I strongly suspect), or related to some unknown “cooking” of the signal from the sensor before it hits the δ-compressor.

Ilya

I agree, simple adding noise

Submitted by lexa on

I agree, adding noise proportional to delta step will hide the problem in this case.

The problem is in RAW converter, not in ARW2

Submitted by Ilya Zakharevich (not verified) on

It is nice to agree with things I did not say, isn’t it?

If you would really agree with what I said, you would look for problems in RawDigger, not in ARW2. As far as I can see, you need to subtract a small bias (and not noise; but proportional to delta step indeed!), since it is very probable¹⁾ that your expectations about how the camera’s compressor rounds up/down 11-bit values to 7-bit deltas are wrong. A mismatch of such expectations creates a bias in dequantizer.

¹⁾ As I reported elsewhere, in the results of RawDigger, the average values in high-δ-step areas are slightly higher than average values in nearby low-δ-step areas.

What should be the value of the bias? I do not know (since your result-JPEG-images are not calibrated); but it should be very easy to fine tune the bias: for the correct value of the bias the beards near star trails should disappear (as they did in Jim Kasson simulations, when he corrected his, similar, bug).

RawDigger is RAW analysis

Submitted by lexa on

RawDigger is RAW analysis tool, it is not RAW converter.

It should unpack data *as is*, without any modifications, adaptive denoising, adaptive bias subtraction, and so on. The goal is to get data 'as RAW as possible'.

All things you wrote about are for raw converters, to get more pleasing look.

One correction to the simulation

Submitted by Ilya Zakharevich (not verified) on

In my explanation, I busted the sign: 12% relative posterization means that ds/dx=1.12 (and not 0.88 as I wrote before). Sorry!

Alex, I cannot find the RAW

Submitted by Ilya Zakharevich (not verified) on

Alex, I cannot find the RAW file you discuss here. Is it publicly available?

Moreover, are you sure that your explanation of the compression algorithm does not contain 1-off error? I have no idea how to encode 11-bits values 0,0,0,0,0,0,0,0,0,0,0,0,0,0,128,128 using this algorithm…

Ilya

I received the file from

Submitted by lexa on

I received the file from a Diglloyd correspondent. I have not asked for permission to distribute the file or to disclose the correspondent's address. You may contact Diglloyd.

the 0,0,....128,128 is not a problem:

two 11bit min/max values (so 64 before curve)

two 4bit coordinates (0E and 0F)

and 14 zeroes.

The minimum delta step is 1, not 0.

Coding 0,0,....128,128

Submitted by Ilya Zakharevich (not verified) on

… 14 zeros is not where the problem is; what are the NEXT two numbers? How do you code the last two 128s? Max you can do with delta=1 is 127.

And your “two 4bit coordinates (0E and 0F)” does not match your description (or dcraw.c). The first one must be in 0...13, the second in 14...15.

[And thanks for clarification about the image. I started to suspecting senility and a rot of my google-fu that I could not find the source file anywhere!]

Yes, you're right. Min value

Submitted by lexa on

Yes, you're right. Min value is 0, min coordinate is something (0..14), max coordinate is 0F or 0E, max value is 64, non-zero delta-pixel is 64 too (because curve slope is 2x).

Your description of compression does not match dcraw.c

Submitted by Ilya Zakharevich (not verified) on

OK, I double-checked with this obfuscated LoC in dcraw.c, and (as far as I can deobfuscate it — apparently the author does not believe in comments), it does NOT match your description. As expected, max-min=128 is decoded with step=2, not step=1.

Ilya

My description is for linear

Submitted by lexa on

My description is for linear data (before tone curve compression), but yours is for tone compressed data.

Yes, if tone compressed data are 0,0,0,...128,128, then delta step becomes 2.

Yes, if tone compressed data

Submitted by Ilya Zakharevich (not verified) on

This is not what your algorithm says (look for ceiling()), and not what your table says (look for “less”).

BTW, this bug not very interesting (will hit very few pixels per image). However, note that the bug I’m always hinting at (that the decompressor makes wrong assumptions about which kind of rounding the compressor had used) is EXACTLY of the same nature. Just a difference between floor() ceiling() round() etc.

Thanks, table heading

Submitted by lexa on

Thanks, the table heading definitely needs to be corrected, while the RawDigger/dcraw/RawSpeed code does not.

Sony A7II still compressed raw files 11+7bit?

Submitted by Ron (not verified) on

Hi Lexa,

do you know if Sony's a7II still has the compressed raw files, or did they fix this issue?

Hello,

Submitted by Iliah Borg on

Hello,

a7II is using the same compressed raw format as a7.

--

Best regards,

Iliah Borg

firmware

Submitted by Jon (not verified) on

Basic question--and apologies if I missed the answer above--if they so desire, can Sony fundamentally change/improve the current lossy compression of raw data with a firmware update?

thanks

Technically, it should be

Submitted by lexa on

Technically, it should be possible (unless RAW 'compression' is built into the chip, not the firmware).

But this change will break compatibility with RAW processing software.

DNG conversion of Sony RAW files

Submitted by msandman1943 (not verified) on

The comment about DNG conversions being larger than the original file caught my eye. I don't have a deep understanding of the technical aspects on Sony's compression algorithm, but at the risk of asking an obviously stupid question, is conversion to DNG a potential work-around to avoid or reduce posterization along high-contrast borders?

In ARW files data is already

Submitted by lexa on

In ARW files data is already lossy compressed, so there is no way to recover already lost values.

It is possible to simulate such values in a DNG converter (or in a RAW converter) by adding some artificial noise (dithering). Not sure if any DNG or RAW converter really does it.

how to count out the number range 14-30%

Submitted by Chen Chen (not verified) on

why:

after performing decompression through the tone curve the step of 16 becomes 32, that is ≈14-30% of the values characteristic to the background.

I mean, how do you calculate the 14-30% of the values characteristic to the background? (What is the math behind it?)

Because:

Submitted by lexa on

Because:

32 is ~1/3..1/7 of ~80..~200

is 32 means that 1 step of grayscale use same value

Submitted by Chen Chen (not verified) on

Does 32 mean that one step of the grayscale uses the same value, because the data step between the decompressed values is 32?

So 32 is so much larger than 1 that the posterization shows up, and it is not because some pixel values are lifted (am I right?)
